This is a classic problem when restoring large SQL Server databases, and you will hit it regardless of which backup/recovery software you use.

Before the actual restore begins, SQL Server prepares the target database by creating the same set of files as the original and initializing them, which means filling them with (lots of) zeros. The end result is a set of “container” files that are the same size as the originals but contain nothing until the actual data is restored.

Depending on the size of the original database and the speed of the destination disk, this process can take a very long time, sometimes longer than 24 hours. During the wait, anything can happen: the server crashes, a firewall cuts the idle connection, the backup/recovery software cuts the connection, and so on.
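To get a feel for how long the zeroing phase might last, here is a rough back-of-the-envelope sketch. It assumes the disk sustains a constant sequential write rate, which real storage rarely does. The function name `zeroing_hours` and the throughput figures are made up for illustration. The 2153 GB size is the image size reported in the log excerpt in this post.

```python
def zeroing_hours(size_gb: float, write_mb_per_s: float) -> float:
    """Estimate hours needed to write size_gb of zeros at a sustained write rate.

    Assumes a constant sequential write throughput (hypothetical simplification).
    """
    if write_mb_per_s <= 0:
        raise ValueError("write_mb_per_s must be positive")
    size_mb = size_gb * 1024           # GB -> MB
    seconds = size_mb / write_mb_per_s
    return seconds / 3600              # seconds -> hours


# 2153 GB is the image size from the log excerpt; 25 and 200 MB/s are
# hypothetical disk speeds chosen only to show the spread.
for rate in (25, 200):
    print(f"{rate} MB/s -> {zeroing_hours(2153, rate):.1f} hours")
```

At an assumed 25 MB/s the estimate lands at roughly a full day, which is in line with the wait described above. A faster disk shortens the wait but does not remove it.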

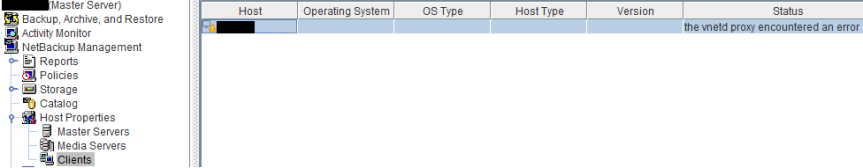

For example, this is what you see in NetBackup’s dbclient log on the target SQL Server:

    20:17:56.727 [8500.8292] <4> readTarHeader: INF - requested filename </SQLTEST1.MSSQL7.SQLTEST1.db.MYDATABASE.~.7.004of004.20161204232656..C> matches returned filename </SQLTEST1.MSSQL7.SQLTEST1.db.MYDATABASE.~.7.004of004.20161204232656..C>
    20:17:56.727 [8500.8292] <4> readTarHeader: INF - image to restore = 2153 GBytes + 95736624 Bytes
    20:17:56.727 [8500.8292] <4> RestoreFileObjects: INF - returning STAT_SUCCESS
    20:17:56.727 [8500.8292] <4> DBthreads::dbclient: INF - DBClient has opened for stripe <3> .
    20:19:03.707 [7180.7284] <4> StartupProcess: INF - Starting: <"C:\Program Files\Veritas\NetBackup\bin\progress" MSSQL>
    20:19:12.594 [8500.5604] <4> bsa_JobKeepAliveThread: INF - sent 10 keep alives.
    20:22:12.605 [8500.5604] <4> bsa_JobKeepAliveThread: INF - sent 10 keep alives.
    20:25:12.618 [8500.5604] <4> bsa_JobKeepAliveThread: INF - sent 10 keep alives.
    20:28:12.632 [8500.5604] <4> bsa_JobKeepAliveThread: INF - sent 10 keep alives.
    20:31:12.647 [8500.5604] <4> bsa_JobKeepAliveThread: INF - sent 10 keep alives.
    .
    and it goes on until 24 hours later
    .
    20:31:20.089 [8500.5604] <4> bsa_JobKeepAliveThread: INF - sent 10 keep alives.
    20:34:20.104 [8500.5604] <4> bsa_JobKeepAliveThread: INF - sent 10 keep alives.
    20:36:21.938 [8500.8292] <4> VxBSAGetData: INF - entering GetData.
    20:36:21.939 [8500.8736] <4> VxBSAGetData: INF - entering GetData.
    20:36:21.939 [8500.7160] <4> VxBSAGetData: INF - entering GetData.
    20:36:21.939 [8500.8292] <4> dbc_get: INF - reading 4194304 bytes
    20:36:21.939 [8500.8736] <4> dbc_get: INF - reading 4194304 bytes
    20:36:21.940 [8500.7160] <4> dbc_get: INF - reading 4194304 bytes
    20:36:21.938 [8500.7368] <4> VxBSAGetData: INF - entering GetData.
    20:36:21.943 [8500.8292] <4> readFromServer: entering readFromServer.
    20:36:21.943 [8500.8736] <4> readFromServer: entering readFromServer.
    20:36:21.943 [8500.7160] <4> readFromServer: entering readFromServer.
    20:36:21.943 [8500.7368] <4> dbc_get: INF - reading 4194304 bytes
    20:36:21.943 [8500.8292] <4> readFromServer: INF - reading 4194304 bytes
    20:36:21.943 [8500.8736] <4> readFromServer: INF - reading 4194304 bytes
    20:36:21.944 [8500.7160] <4> readFromServer: INF - reading 4194304 bytes
    20:36:21.944 [8500.7368] <4> readFromServer: entering readFromServer.
    20:36:21.944 [8500.8292] <2> readFromServer: begin recv -- try=1
    20:36:21.944 [8500.8736] <2> readFromServer: begin recv -- try=1
    20:36:21.944 [8500.7160] <2> readFromServer: begin recv -- try=1
    20:36:21.944 [8500.7368] <4> readFromServer: INF - reading 4194304 bytes
    20:36:21.944 [8500.7368] <2> readFromServer: begin recv -- try=1
    20:36:21.947 [8500.8736] <2> readFromServer: begin recv -- try=1
    20:36:21.947 [8500.7160] <2> readFromServer: begin recv -- try=1
    20:36:21.950 [8500.7368] <2> readFromServer: begin recv -- try=1
    20:36:21.951 [8500.8292] <2> readFromServer: begin recv -- try=1
    20:36:21.951 [8500.8292] <2> readFromServer: begin recv -- try=1
    20:36:21.955 [8500.8736] <2> readFromServer: begin recv -- try=1
    20:36:21.955 [8500.8736] <2> readFromServer: begin recv -- try=1
    20:36:21.957 [8500.8292] <2> readFromServer: begin recv -- try=1
    20:36:21.957 [8500.8292] <2> readFromServer: begin recv -- try=1
    20:36:21.957 [8500.8292] <2> readFromServer: begin recv -- try=1
    20:36:21.958 [8500.8292] <2> readFromServer: begin recv -- try=1
    20:36:21.958 [8500.8292] <2> readFromServer: begin recv -- try=1
    20:36:21.958 [8500.8292] <2> readFromServer: begin recv -- try=1
    20:36:21.958 [8500.8292] <2> readFromServer: begin recv -- try=1
    20:36:21.959 [8500.8292] <2> readFromServer: begin recv -- try=1
    20:36:21.959 [8500.8292] <16> readFromServer: ERR - recv() returned 0 while reading 4194304 bytes on 1340 socket
    20:36:21.959 [8500.8292] <16> dbc_get: ERR - failed reading data from server, bytes read = -1
    20:36:21.959 [8500.8292] <4> closeApi: entering closeApi.
    20:36:21.959 [8500.8292] <4> closeApi: INF - EXIT STATUS 5: the restore failed to recover the requested files
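When the log is thousands of lines long, the pattern above (a long run of keep-alives, then a socket error, then an exit status) is easier to spot with a small script. The following is a minimal sketch, not a NetBackup tool. The entry layout in the regular expression and the reading of `<16>` as the error severity are assumptions inferred only from the excerpt above, and the names `ENTRY` and `summarize` are hypothetical.

```python
import re

# Layout inferred from the excerpt: time, [pid.tid], <severity>, function, message.
ENTRY = re.compile(
    r"^(?P<time>\d{2}:\d{2}:\d{2}\.\d{3}) \[(?P<pid>\d+)\.(?P<tid>\d+)\] "
    r"<(?P<sev>\d+)> (?P<func>\S+?): (?P<msg>.*)$"
)


def summarize(lines):
    """Count keep-alive entries and collect error messages and the exit status."""
    keep_alives = 0
    errors = []
    exit_status = None
    for line in lines:
        m = ENTRY.match(line.strip())
        if not m:
            continue  # skip anything that is not a log entry
        if m["func"] == "bsa_JobKeepAliveThread":
            keep_alives += 1
        if m["sev"] == "16":  # assumed to mark errors, as in the excerpt
            errors.append(m["msg"])
        status = re.search(r"EXIT STATUS (\d+)", m["msg"])
        if status:
            exit_status = int(status.group(1))
    return {"keep_alives": keep_alives, "errors": errors, "exit_status": exit_status}


# A few lines copied from the excerpt above.
sample = [
    "20:19:12.594 [8500.5604] <4> bsa_JobKeepAliveThread: INF - sent 10 keep alives.",
    "20:22:12.605 [8500.5604] <4> bsa_JobKeepAliveThread: INF - sent 10 keep alives.",
    "20:36:21.959 [8500.8292] <16> dbc_get: ERR - failed reading data from server, bytes read = -1",
    "20:36:21.959 [8500.8292] <4> closeApi: INF - EXIT STATUS 5: the restore failed to recover the requested files",
]
print(summarize(sample))
```

In the excerpt the keep-alive entries are about three minutes apart, so a large keep-alive count followed by a non-zero exit status suggests the job spent most of its time waiting for file initialization rather than moving data.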

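In this case, the workaround is to bypass the “zeroing out” process by enabling instant file initialization, which means granting the SQL Server service account the “Perform volume maintenance tasks” right. Note that this applies to data files only; transaction log files are still zeroed. The steps are described in https://docs.microsoft.com/en-us/sql/relational-databases/databases/database-instant-file-initialization.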

The NetBackup for SQL Admin Guide mentions it as well, though in less detail.